Paywall A/B Testing

Updated: July 27, 2023

Paywall A/B testing is a research method that involves conducting a randomized controlled experiment to assess the effectiveness of mobile app paywalls.

What is A/B testing for mobile app paywalls?

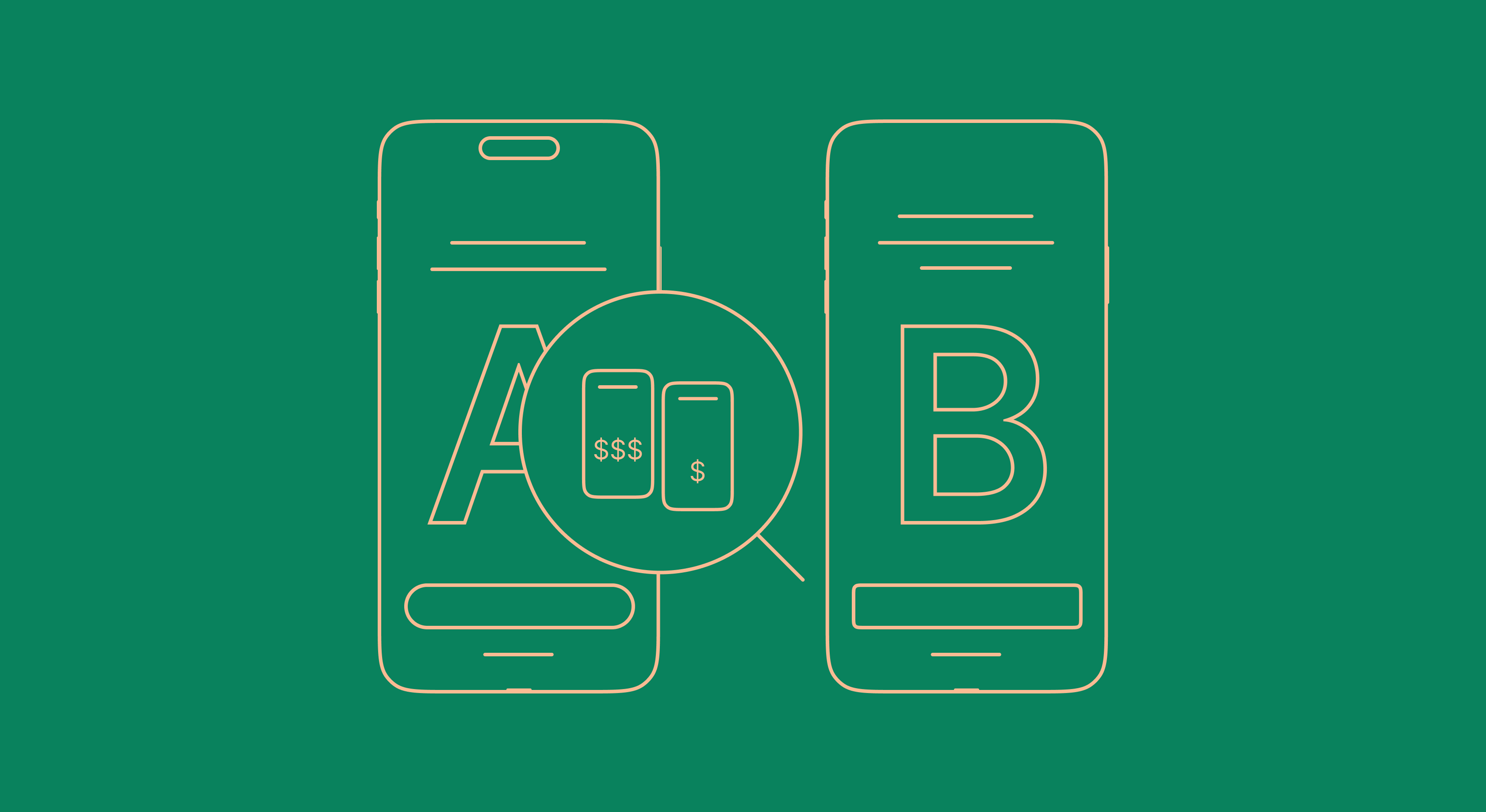

The A/B testing process typically involves randomly dividing users into two or more groups, presenting each group with a different version of the paywall, and measuring the results over a period of time.

With paywall A/B testing, app publishers can evaluate the effectiveness of different paywalls by tracking metrics such as conversion rate, revenue per user, and retention rate, and use the results to grow MRR (check how Social Kit doubled MRR with A/B testing).

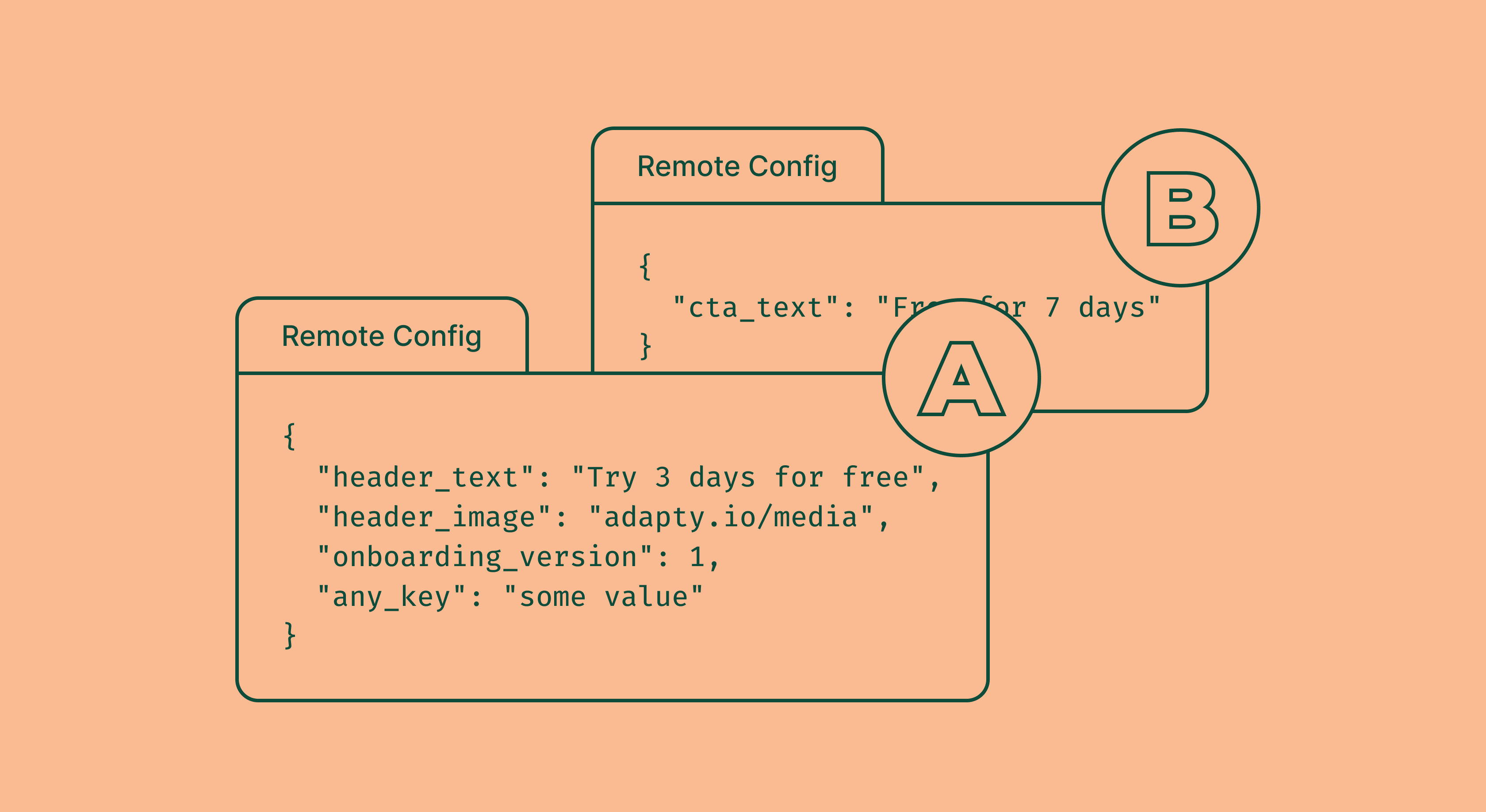

Many SaaS platforms build A/B testing into their products for different business purposes (e.g. Squarespace A/B testing for web pages, Amplitude A/B testing for features or experiences on a website or app, iOS 15 A/B testing in the App Store, etc.). At Adapty, we designed a dedicated paywall A/B testing tool that lets you create multiple paywalls, easily set up and run an A/B test, and see detailed statistics on user behavior to make data-driven decisions on paywall optimization.

Despite the many advantages of A/B testing, mobile app publishers approach it with caution because of the challenges involved in creating variations, managing the experiment, collecting large amounts of data, and gathering insights for future tests. With the Adapty A/B testing solution, you can create multiple paywalls without involving a designer or developer, easily set up a test, and see detailed performance metrics for each variation, all in one dashboard.

How to create paywall A/B examples and run app paywall A/B testing

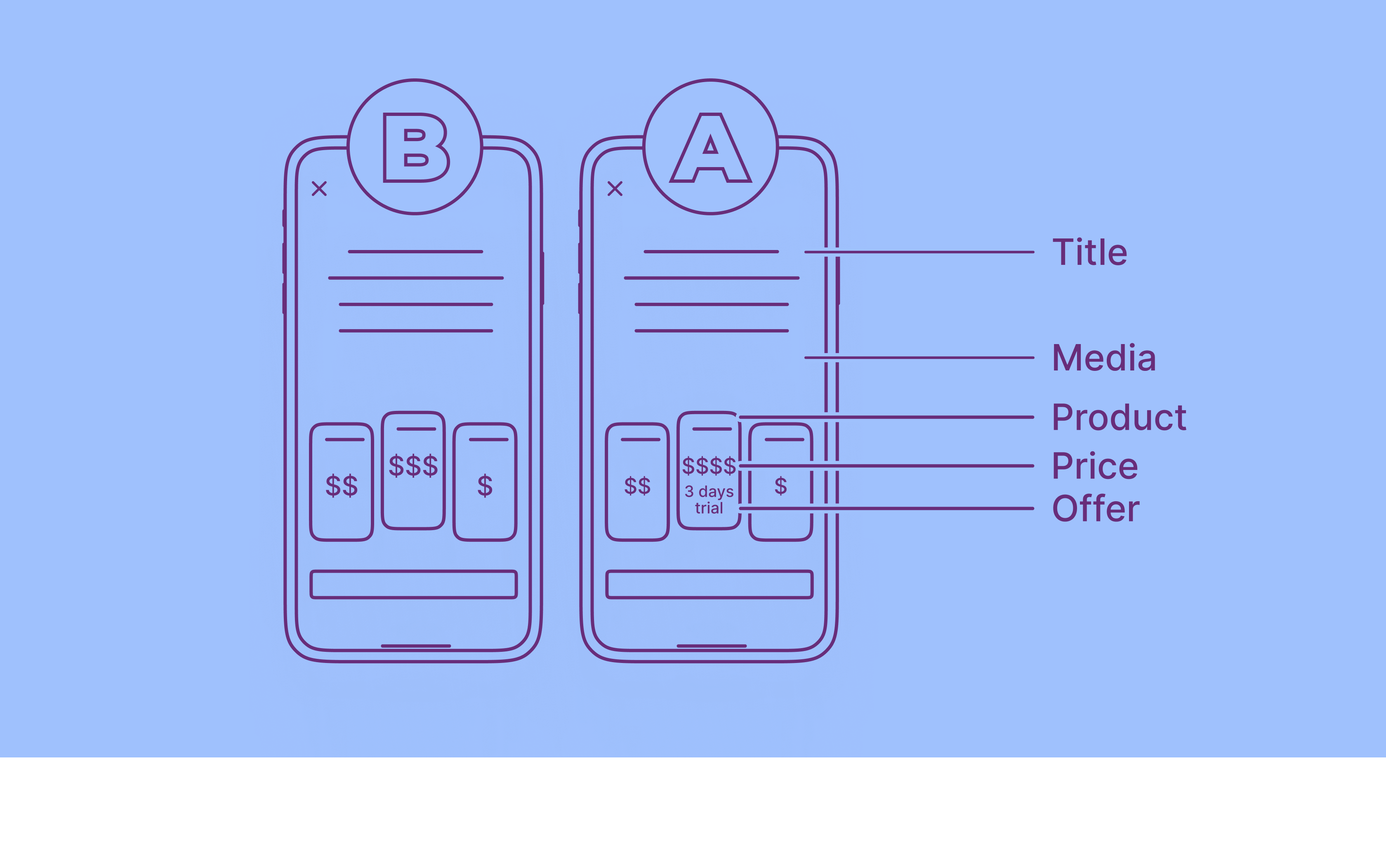

To run an A/B test on your paywall, you compare two versions: the original (variation A) and a new one (variation B). You can create new paywalls for the test or reuse an existing one as a variation. Test one element at a time, such as the price or the button, so that any difference in results can be attributed to that change.

You can run the test for all users or only for a segment of them. Split the traffic equally (50/50) or assign different weights (70/30, 80/20, etc.). The weight determines the share of users who see each version. For example, if paywall A has a 30% weight and paywall B has a 70% weight, then 30% of users see A and 70% see B.
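The weighted split described above can be sketched in a few lines of Python. This is only an illustration of one common approach (hashing the user ID into a stable bucket), not how Adapty assigns users internally. The function name `assign_variation`, the `experiment` label, and the sample user IDs are all hypothetical.

```python
import hashlib


def assign_variation(user_id: str, weights: dict[str, float],
                     experiment: str = "paywall_test") -> str:
    """Map a user to a variation in proportion to the given weights.

    Hypothetical helper: the same user always gets the same variation
    for a given experiment name, because the choice depends only on a hash.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    # Hash the experiment name and user ID into a point in [0, 1).
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64

    # Walk the cumulative weights until the point falls inside a bucket.
    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return name  # guard against floating-point rounding at the upper edge


# Usage: a 30/70 split between paywall A and paywall B.
weights = {"A": 30, "B": 70}
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variation(f"user-{i}", weights)] += 1
share_a = counts["A"] / 10_000  # close to 0.30 for a large sample
```

Hashing rather than drawing a fresh random number on each launch keeps a returning user on the same paywall for the whole experiment, which matters when metrics are measured over a period of time.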

To get reliable results, the test needs to reach statistical significance. Aim for a confidence level of at least 90% or, preferably, 95%. At that level, the observed difference between the paywalls, whether the change helps or hurts, is unlikely to be the result of random chance. Keep the test running until it reaches the required significance. Adapty can calculate this for you when you set up the test. Follow a step-by-step guide to launch a paywall test.
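To make the idea of statistical significance concrete, here is a minimal sketch of a two-proportion z-test on conversion rates, a standard frequentist check. It is an assumption that this test fits your data; the article does not say which method Adapty uses, and the function name and sample numbers below are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b are the numbers of converting users and n_a / n_b the
    numbers of users who saw each paywall. Hypothetical helper for illustration.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b

    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so nothing to detect

    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Usage with made-up numbers: 50 of 1,000 users convert on A, 80 of 1,000 on B.
z, p_value = two_proportion_z_test(50, 1000, 80, 1000)
significant_at_95 = p_value < 0.05  # 95% significance corresponds to p < 0.05
```

A 90% threshold corresponds to `p_value < 0.10`. Checking the p-value repeatedly and stopping as soon as it dips below the threshold inflates false positives, so it is safer to fix the sample size or duration in advance.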
