Most teams treat onboarding like a project. Build it, test it, launch it, move on.

That mindset costs you money every single day.

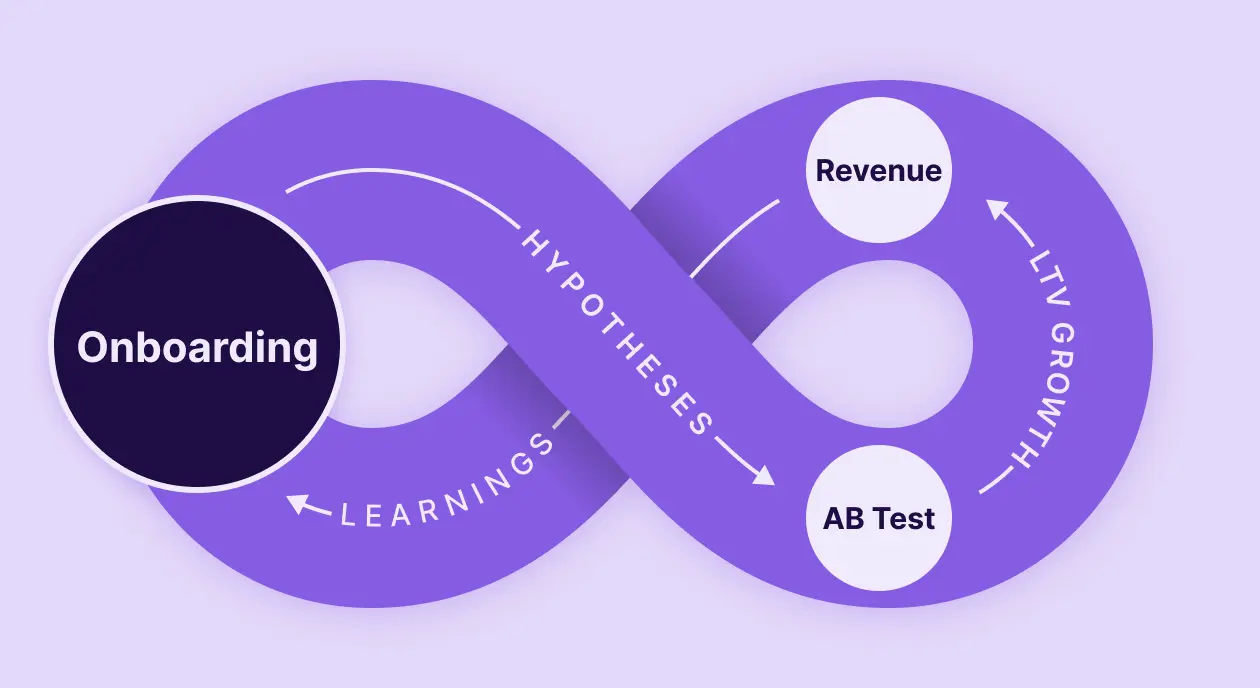

High-performing apps don’t “finish” onboarding. They treat it like paid acquisition: something that requires constant testing, iteration, and optimization. The question isn’t whether to test onboarding. It’s how often, what to prioritize, and how to avoid testing yourself into chaos.

Who should own onboarding?

Before we talk about testing, let’s address ownership – because if nobody owns onboarding, nobody optimizes it.

In most B2C subscription apps, onboarding sits with the growth manager or growth PM. This is the person responsible for in-app monetization: pricing strategy, paywall optimization, and yes, onboarding flows.

This makes sense. Onboarding is part of your conversion funnel, not a product feature. It lives between acquisition (owned by your UA manager) and activation (owned by the product). The growth manager connects those dots.

In larger products with multiple features or sub-products, each product manager typically owns onboarding for their specific flow. The CPO or Head of Product sets overall direction, but individual PMs are responsible for testing and improving their onboarding experiences.

The key principle: whoever owns go-to-market for a feature should also own onboarding for that feature. If someone’s responsible for getting users to discover and try something, they should also be responsible for converting that intent into activation or revenue.

If your onboarding doesn’t have a clear owner, fix that first. Testing without ownership is just chaos.

How often should you change onboarding?

The short answer: more often than you think.

The idea that you’ll find the “perfect” onboarding and run it forever is tempting. It’s also unrealistic.

Especially in B2C products, onboarding needs frequent updates. Trends change. User expectations evolve. Competitors introduce new patterns that become table stakes. That interaction where users press and hold the screen for 5 seconds to “commit” to a goal? Nobody used it a few years ago. Then someone tested it, conversions jumped 3%, and now it’s everywhere in habit apps.

Three percent might not sound like much. But when it touches every single user who installs your app, 3% adds up to meaningful revenue.
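
To make “every single user” concrete, here is a back-of-the-envelope sketch. It assumes the 3% is a relative lift in install-to-subscriber conversion and borrows two figures from elsewhere in this piece as inputs: 10,000 installs per day and roughly 5% of users subscribing. The function name is illustrative, not part of any tool.

```python
def extra_subscribers_per_year(installs_per_day: int,
                               base_conversion: float,
                               relative_lift: float) -> float:
    """Extra subscribers per year from a relative lift in install-to-subscriber conversion."""
    baseline_per_day = installs_per_day * base_conversion  # subscribers/day before the change
    return baseline_per_day * relative_lift * 365           # additional subscribers over a year


# Assumed inputs: 10,000 installs/day, 5% conversion, 3% relative lift.
print(round(extra_subscribers_per_year(10_000, 0.05, 0.03)))  # 5475
```

Under these assumptions that is about 15 extra subscribers a day, or roughly 5,500 a year, from a single change to a flow every new user sees.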

You can’t predict what will work. Ideas that seem brilliant fail in testing. Small tweaks that feel insignificant drive double-digit lifts. The only way to know is to test.

That doesn’t mean you need to change onboarding every week. A working version can run for one, two, or even three months, depending on your resources. But if you haven’t touched onboarding in six months, something’s wrong.

Testing onboarding without breaking everything

Here’s the problem with testing: if you change onboarding, pricing, and product features simultaneously, you have no idea what caused your results.

Testing must be sequential, not parallel.

Run one major test at a time. Change onboarding this month. If that works, lock it in and test pricing next month. Then come back to onboarding later with a new hypothesis.

This requires prioritization. If you’ve run 10 onboarding tests but haven’t touched pricing in months, pricing might give you a 10% lift while another onboarding tweak only gives you 2%. Test pricing first.
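
A minimal sketch of that prioritization, assuming you keep a backlog of test ideas with a rough expected lift for each. The `TestIdea` class, the example hypotheses, and the lift estimates are hypothetical; the point is that the queue hands out one major test at a time, chosen by expected impact rather than by which area you tested last.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    area: str             # e.g. "pricing" or "onboarding"
    hypothesis: str
    expected_lift: float  # rough guess, e.g. 0.10 for +10%


def next_test(backlog: list[TestIdea]) -> TestIdea:
    """Pick the single idea to run next: the highest expected lift wins.

    Tests run sequentially, so this returns one idea, never a batch.
    """
    return max(backlog, key=lambda idea: idea.expected_lift)


backlog = [
    TestIdea("onboarding", "Cut two low-value screens", 0.02),
    TestIdea("pricing", "Add an annual plan with a trial", 0.10),
]
print(next_test(backlog).area)  # pricing
```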

But if you’ve exhausted your pricing tests and returns are diminishing, onboarding is a great place to circle back – especially if you’ve never tested it seriously. It’s realistic to see 10-15% lifts, sometimes even 20%, from onboarding optimization when you’re starting from an unoptimized baseline.

The 80/20 testing rule

Most small apps run 50/50 A/B tests. Half the traffic sees version A, half sees version B.

Large apps rarely do this. When you’re getting 10,000+ installs per day, a bad test costs real money.

Instead, run 80/20 or 90/10 splits. Keep 80-90% of traffic on your proven control version and send 10-20% to the new variant. At high install volumes you’ll still reach statistical significance (it takes longer than with a 50/50 split), but you’re not risking major revenue loss if the test bombs.
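
One common way to implement an uneven split is deterministic hash bucketing: hash the user ID together with the experiment name, map the hash to a number between 0 and 1, and send users below the variant share to the new flow. This is a sketch, not how any particular platform does it; `assign_variant` and its parameters are illustrative.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variant_share: float = 0.2) -> str:
    """Deterministically bucket a user into 'control' or 'variant'.

    Hashing the experiment name together with the user ID keeps each user in the
    same arm across sessions, while different experiments get independent splits.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # first 32 bits mapped to [0, 1]
    return "variant" if bucket < variant_share else "control"


# With an 80/20 split, roughly 20% of users should land in the variant.
users = [f"user-{i}" for i in range(100_000)]
share = sum(assign_variant(u, "onboarding_v2") == "variant" for u in users) / len(users)
print(f"variant share: {share:.3f}")
```

Because assignment depends only on the IDs, a returning user stays in the same flow as long as the split is unchanged, which matters when onboarding spans several sessions.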

This is especially important for aggressive tests – completely new onboarding structures, radical pricing changes, or counterintuitive ideas that might fail spectacularly.
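
The trade-off can be put in numbers. The sketch below assumes a hypothetical variant that converts 30% worse than control, 10,000 installs per day, and 5% baseline conversion. It computes subscribers lost per day under each split, plus how much total traffic each split needs relative to 50/50 for the same statistical power. That multiplier relies on the standard approximation that the variance of the difference scales with 1/n_control + 1/n_variant, which holds roughly when both arms convert at similar rates.

```python
def daily_subscribers_lost(installs_per_day: int, conversion: float,
                           variant_share: float, relative_drop: float) -> float:
    """Subscribers lost per day if the variant converts `relative_drop` worse than control."""
    return installs_per_day * variant_share * conversion * relative_drop


def traffic_multiplier(variant_share: float) -> float:
    """Total traffic needed vs. a 50/50 split for the same power (equal-variance approximation).

    With N users split (1 - s, s), the variance of the difference scales with
    1/(1 - s) + 1/s; a 50/50 split gives 2 + 2 = 4, hence the division by 4.
    """
    return (1 / (1 - variant_share) + 1 / variant_share) / 4


for share in (0.5, 0.2, 0.1):
    lost = daily_subscribers_lost(10_000, 0.05, share, 0.30)
    print(f"{share:.0%} variant: {lost:.0f} subscribers lost/day, "
          f"{traffic_multiplier(share):.2f}x traffic needed")
```

Under these assumptions an 80/20 split cuts the daily downside by 60% compared with 50/50 but needs about 1.56 times the total traffic (about 2.8 times for 90/10), which is why the approach suits apps with high install volume.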

Always keep your control running. Traffic sources change. Attribution shifts. Audience composition evolves. If you’re not running a proper A/B test, you can’t separate signal from noise.
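
Separating signal from noise at the end of a test comes down to comparing conversion rates between control and variant. A minimal sketch using a two-sided, two-proportion z-test with pooled variance, a standard choice for large samples. The counts are hypothetical, and your experimentation tool may use a different method (sequential or Bayesian testing, for instance).

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rates (pooled variance).

    Returns (z, p_value); a positive z means B converts better than A.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|)) for a standard normal
    return z, p_value


# Hypothetical 80/20 test: control 4,000/80,000 (5.0%), variant 1,100/20,000 (5.5%).
z, p = two_proportion_z_test(4_000, 80_000, 1_100, 20_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers z is about 2.87 and p is about 0.004, so the variant’s lift would clear a conventional 0.05 threshold.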

Localization: The lowest-hanging fruit

Let’s talk about the single biggest onboarding opportunity most apps ignore: localization.

Not just translation. Full localization – language, imagery, narratives, value propositions.

If you’re showing English onboarding to French-speaking users, your conversion rate is guaranteed to be lower. This isn’t something you need to A/B test. It’s an axiom. Same for any other language.

When apps earning $100K-$200K per month from non-English regions localize their creatives, onboarding, and paywalls, conversion can jump ~30% within two weeks.

Those are massive numbers for what’s often a straightforward change.

Step one is translation, which has never been easier. Tools like ChatGPT and other LLMs handle translation extremely well. Human review is ideal, but even pure machine translation performs significantly better than no localization at all.

But you can go further. Test narratives. What a “happy family” looks like differs by country. What a “healthy meal” looks like differs. What a “beautiful person” looks like differs. The value you emphasize and the problems you highlight should vary by region.
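
Because narratives can differ by region and not only by language, it helps to resolve which onboarding variant to show from most specific to least specific. A minimal sketch, assuming you ship onboarding variants keyed by locale tags such as `fr` and `fr-CA`; the function name and the locale set are hypothetical.

```python
def pick_onboarding_locale(user_locale: str, available: set[str], default: str = "en") -> str:
    """Return the most specific onboarding locale available for a user.

    Tries the full tag ("fr-CA"), then the bare language ("fr"), then the default.
    Matching is case-insensitive and accepts "_" or "-" as the separator.
    """
    by_lower = {tag.lower(): tag for tag in available}
    tag = user_locale.replace("_", "-").lower()
    for candidate in (tag, tag.split("-")[0]):
        if candidate in by_lower:
            return by_lower[candidate]
    return default


variants = {"en", "fr", "fr-CA", "de"}
print(pick_onboarding_locale("fr_CA", variants))  # fr-CA (regional narrative)
print(pick_onboarding_locale("fr-BE", variants))  # fr (language-level translation)
print(pick_onboarding_locale("ja-JP", variants))  # en (not localized yet)
```

The last case is the one to watch: every locale that falls through to the English default is traffic seeing a non-localized flow.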

In B2B, English is more accepted globally, but localization still helps. Often, you don’t even need to localize your entire product – localizing onboarding and landing pages alone has a strong impact.

In B2C, launching in new markets is often straightforward: run ads in a new country, find localized creatives, and traffic starts flowing. If those users land in a non-localized app, you’re immediately losing 20-30% of potential conversion.

This is why onboarding is never “final.” There are dozens of languages, hundreds of regions, and you can almost always find a better solution somewhere. Then, three months later, a new pattern emerges or you launch a new feature that needs introduction. And you iterate again.

What to focus your tests on

So what should you test?

1. Structural changes:

- Onboarding length (add screens vs. remove screens)

- Question types (multiple choice vs. open-ended vs. interactive)

- Personalization logic (how you segment users based on answers)

- First paywall placement (after screen 5 vs. after screen 10)

2. Content and messaging:

- Value propositions by segment

- Social proof placement and format

- Imagery and visual style

- Localized narratives

3. Interaction patterns:

- New engagement mechanics (finger press, progress bars, commitments)

- Friction points (strategic pauses vs. fast flow)

- Transitions and animations

The mistake most teams make is testing surface-level stuff – button colors, headline copy, image swaps – instead of structural changes that actually impact conversion.

Test big things first. Once you’ve exhausted major structural improvements, then optimize the details.

Can you over-test onboarding?

Yes, but the real risk isn’t the number of tests. It’s testing too many things simultaneously and losing track of what works.

Some teams test onboarding so aggressively that they conclude it’s better to remove it entirely. I’ve never actually seen that work. Products rarely get simpler over time – usually the opposite.

I have seen cases where simplifying onboarding improved performance, usually by cutting useless screens. If fewer than 90-95% of users complete onboarding, something’s wrong. Sometimes the fix is shortening it. Other times, lengthening it works better.
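
A quick way to apply that completion benchmark is to compute it from screen-level counts and see where the biggest drop happens. This sketch assumes you can export how many users reached each onboarding screen; the function, the 0.90 default (the low end of the 90-95% range above), and the sample counts are illustrative.

```python
def onboarding_health(screen_users: list[int], threshold: float = 0.90) -> tuple[float, bool, int]:
    """Summarize an onboarding funnel.

    screen_users[i] is the number of users who reached screen i (0-based).
    Returns (completion rate, whether it meets `threshold`, index i of the
    transition i -> i + 1 with the largest relative drop-off).
    """
    completion = screen_users[-1] / screen_users[0]
    drops = [1 - screen_users[i + 1] / screen_users[i] for i in range(len(screen_users) - 1)]
    worst = max(range(len(drops)), key=lambda i: drops[i])
    return completion, completion >= threshold, worst


completion, healthy, worst = onboarding_health([10_000, 9_800, 9_700, 8_200, 8_100])
print(f"completion {completion:.0%}, healthy={healthy}, biggest drop after screen {worst}")
```

Here completion is 81%, below the benchmark, and most of the loss happens between screens 2 and 3 (0-based), which is the first place to look for a screen to cut or rework.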

But fully removing onboarding means users see a paywall immediately. That doesn’t work. You still need to show them why the product solves their problem.

Even free apps benefit from basic guidance. No onboarding is almost always worse than bad onboarding.

When onboarding needs to change completely

Sometimes external factors force onboarding changes:

New monetization models: If you switch from paid to subscription, onboarding needs a complete overhaul. For new users, this doesn’t matter – they see the product as new. For existing users, offer a grace period and explain why the change happened: ongoing development, server costs, and continuous improvements.

The message to existing users: subscriptions enable constant product evolution. In return, they get regular updates, new features, and discounts or extended trials.
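
The grace-period logic can be expressed as a small rule. A minimal sketch, assuming you know each user’s install date and the date of the switch; the 30-day grace period, the function name, and the returned treatment labels are all hypothetical.

```python
from datetime import date, timedelta


def paywall_treatment(install_date: date, switch_date: date, today: date,
                      grace_days: int = 30) -> str:
    """Pick a treatment after moving from a paid app to subscriptions.

    New users (installed on or after the switch) simply get subscription onboarding.
    Existing users keep access during a grace period with a notice explaining the
    change, and only afterwards see a paywall.
    """
    if install_date >= switch_date:
        return "subscription_onboarding"
    if today < switch_date + timedelta(days=grace_days):
        return "grace_period_notice"
    return "existing_user_paywall"


switch = date(2024, 6, 1)
print(paywall_treatment(date(2024, 6, 10), switch, date(2024, 6, 10)))  # subscription_onboarding
print(paywall_treatment(date(2023, 1, 15), switch, date(2024, 6, 15)))  # grace_period_notice
print(paywall_treatment(date(2023, 1, 15), switch, date(2024, 7, 2)))   # existing_user_paywall
```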

New audience segments: If your product expands beyond its original niche and attracts a very different audience, segment immediately. Ask users upfront what they want to use the product for, then show different onboarding flows and paywalls based on their answer.
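
In code, that segmentation can be as simple as a lookup from the upfront answer to a flow and a paywall. A minimal sketch; the answer keys, flow IDs, and paywall IDs are hypothetical placeholders.

```python
# Hypothetical answers to "What do you want to use the app for?"
FLOWS: dict[str, tuple[str, str]] = {
    "personal": ("onboarding_personal", "paywall_personal"),
    "work": ("onboarding_work", "paywall_work"),
}
DEFAULT_FLOW = ("onboarding_general", "paywall_general")


def route(answer: str) -> tuple[str, str]:
    """Return (onboarding flow, paywall) for a user's answer, with a general fallback."""
    return FLOWS.get(answer.strip().lower(), DEFAULT_FLOW)


print(route("Work"))       # ('onboarding_work', 'paywall_work')
print(route("something"))  # ('onboarding_general', 'paywall_general')
```

Keeping a general fallback means an unexpected or skipped answer still lands in a working flow.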

Even if the core product stays the same, the value proposition differs by segment. Onboarding should highlight what matters to each group.

Metrics will immediately show where new users drop off or fail to activate. That’s your signal to create dedicated onboarding paths.
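
To spot which segment needs its own path, compare each segment’s activation rate with the overall rate. A minimal sketch, assuming you can count new users and activated users per segment; the function name, the 0.8 tolerance, and the sample numbers are hypothetical.

```python
def underperforming_segments(stats: dict[str, tuple[int, int]],
                             tolerance: float = 0.8) -> list[str]:
    """Flag segments whose activation rate is below `tolerance` times the overall rate.

    stats maps segment name -> (new_users, activated_users).
    """
    total_users = sum(n for n, _ in stats.values())
    total_activated = sum(a for _, a in stats.values())
    overall = total_activated / total_users
    return sorted(
        segment for segment, (n, a) in stats.items()
        if n > 0 and a / n < tolerance * overall
    )


stats = {"original_niche": (8_000, 2_400), "new_audience": (2_000, 300)}
print(underperforming_segments(stats))  # ['new_audience']
```

Here the overall activation rate is 27%; the new audience activates at 15%, well under the flag line, which is the signal to give it a dedicated onboarding path.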

Offboarding matters less than you think

One last topic: offboarding and winbacks.

Offboarding matters, but less than onboarding – especially in B2C.

In B2C, improving the start of your funnel by 1% usually has a much bigger impact than doubling offboarding conversion. Only ~5% of users ever subscribe, so optimizing acquisition and activation affects a much larger base.

That said, winbacks work. Discounts, free months, lifetime offers – all of these can convert. Email winbacks typically convert 5-10%, which is meaningful because they’re very cheap to execute.

Even the 95% of users who never subscribed can be re-engaged via email or push. A 0.5-1% conversion rate there is worthwhile because the audience is huge.
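
The same logic in numbers. This sketch uses the rates from the text (about 5% of users subscribe, 5-10% for email winbacks, 0.5-1% for users who never subscribed) and assumes a hypothetical cohort of 100,000 installs in which 2,000 subscribers later lapse.

```python
installs = 100_000                         # hypothetical cohort size
subscribers = installs * 5 // 100          # ~5% ever subscribe -> 5,000
lapsed = 2_000                             # hypothetical: subscribers who later cancel
never_subscribed = installs - subscribers  # 95,000

audiences = {
    "lapsed subscribers (5-10%)": (lapsed, 0.05, 0.10),
    "never subscribed (0.5-1%)": (never_subscribed, 0.005, 0.01),
}
for name, (size, low, high) in audiences.items():
    print(f"{name}: {size * low:.0f}-{size * high:.0f} conversions")
```

Under these assumptions the lower-converting group still yields more conversions (475-950 versus 100-200) because it is almost 50 times larger.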

In B2B, churn works differently. Users rarely leave silently. If price is an issue, they’ll negotiate. If they’re leaving, there were usually warning signals earlier – reduced usage, feature drop-off, support tickets. Proactive intervention during those moments matters more than offboarding flows.

Churn in B2B is generally much lower than in B2C, at least in subscription products.

But the classic story still applies: when you try to cancel your mobile plan, suddenly they offer everything at half price. It works because the cost of retaining a customer is almost always lower than acquiring a new one.

High-velocity testing vs. set-it-and-forget-it

| Aspect | High-velocity testing | Set-it-and-forget-it |
|---|---|---|
| Testing frequency | Monthly or quarterly tests | Once at launch, rarely revisited |
| Localization | Continuous expansion into new languages/regions | English only or minimal translation |
| Ownership | Clear owner (growth PM, product manager) | Shared responsibility (nobody owns it) |
| Iteration cycle | Sequential tests with clear hypotheses | Random changes without measurement |
| Results | 10-20% annual conversion improvement | Stagnant or declining conversion over time |
| Mindset | Onboarding is part of growth infrastructure | Onboarding is a one-time project |

What high-performing teams do differently

Teams that consistently improve onboarding conversion:

- They assign clear ownership. Onboarding has a named owner who’s accountable for testing and results.

- They test sequentially. One major change at a time, with proper A/B testing and statistical significance.

- They prioritize localization. Translation alone often delivers bigger lifts than months of copy optimization.

- They protect downside risk. Large apps use 80/20 splits to test aggressively without catastrophic revenue loss.

- They never stop. Onboarding optimization is continuous, not a project with an end date.

The teams that struggle treat onboarding like a feature: build it once, ship it, move on. Then they wonder why conversion stagnates while competitors pull ahead.

The bottom line

Onboarding is never finished because user expectations evolve, markets change, and new patterns emerge constantly.

The apps that win treat onboarding like paid acquisition: something that requires continuous investment, rigorous testing, and relentless optimization.

If your onboarding hasn’t been touched in six months, start testing. If you’re not localized beyond English, fix that first – the returns are immediate and massive. If nobody owns onboarding at your company, assign it today.

Small improvements compound. A 2% lift this quarter, 3% next quarter, 5% from localization – these stack on top of each other and touch every user who installs your app.
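
Those lifts multiply rather than add, assuming each is a relative improvement applied on top of the previous baseline and that they don’t interact. A quick check with the numbers above:

```python
from math import prod

lifts = [0.02, 0.03, 0.05]  # this quarter, next quarter, localization
combined = prod(1 + lift for lift in lifts) - 1
print(f"combined lift: {combined:.1%}")  # combined lift: 10.3%
```

That is slightly more than the 10% you get by adding them, and each further lift applies to the already-improved base.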

That’s the leverage. Use it.

Want to test onboarding flows without waiting for App Store review? Adapty’s Onboarding Builder includes one-click auto-localization and lets you launch experiments without code – so you can move fast and iterate based on real conversion data.

Based on a conversation with Kirill Potekhin (CPO at Adapty) on the Podlodka podcast.