Performance marketing for subscription apps is tougher than ever. Rising costs, black-box algorithms, and privacy restrictions have made it harder to know if your ad spend is driving real value. One critical lever still in your control? Signal choice.

Ad platforms like Meta and TikTok rely on in-app events to optimize campaigns. The wrong signal leads to wasted spend and under-delivery. The right one can transform results. But with so many possible events and combinations, how do you know which works best?

This post shares a data-driven framework for evaluating signals scientifically, helping you find the optimal ad signal for your app. It covers:

- What ad signals are.

- The problems associated with the signals typically used by subscription apps.

- A framework designed to help select a more effective optimization signal.

TL;DR: Though most subscription apps rely on signals like trial starts to optimize ad campaigns, these are often not the best choice. Such early events don’t always correlate with paying subscribers, leading to wasted spend and higher customer acquisition costs (CAC).

You’ll need to enrich your signal with one or more additional events. To decide which events, we demonstrate a framework based on machine learning principles. For instance, this framework can help you determine whether the “trial + 3 content views” combination is a better predictor than “trial + completed onboarding.”

What are ad signals and why do they matter?

Ad platforms have become increasingly algorithmic over the past few years. Marketers no longer need to micromanage targeting or placements. Instead, platforms like Meta, TikTok, and Google Ads now handle these decisions automatically, using their machine learning systems to find the best users for your goal.

At the center of this system is your goal event: the in-app action you tell the platform to optimize for. This goal event is often referred to as the “signal” or sometimes the conversion event. It’s the data point that drives the platform’s algorithms to learn who is likely to take similar actions and allocate budget accordingly. Examples include:

- completed_onboarding

- trial_started

- started_subscription

Given the shift to algorithmic bidding, choosing the right signal is critical. A strong, well-chosen signal allows the platform to quickly identify high-quality users and deliver efficient results. A weak or misaligned signal, on the other hand, can lead to wasted spend, poor delivery, and campaigns that fail to scale.

Why can’t a ‘high-paying subscriber’ be our signal?

So, we now know why signals are so critical for ad platforms. The goal we really care about is paying customers or those who stick around. Can we just select those as our signals? Not quite. Here’s why:

Attribution windows and platform visibility

Ad platforms have limited attribution windows for conversion events. For example, Meta Ads offers options of 1-day, 7-day, or 28-day attribution. On iOS, this visibility diminishes quickly. Meta’s Aggregated Event Measurement (AEM) reduces attribution to 1-day or 7-day, but the longer window often loses user matching accuracy due to probabilistic methods. SKAdNetwork (SKAN) restricts attribution even further to just 48 hours. While SKAN 4 introduces extended windows, they remain complex and outside the scope of this post.

Signal volume requirements

Platforms like Meta need a sufficient volume of conversion events per ad set each week to train their algorithms effectively. If the signal (e.g., paid subscriber) is too sparse, campaigns may struggle to exit the learning phase or scale effectively.

Because of these constraints, most subscription apps end up optimizing for the trial event.

Pitfalls of the trial event

Optimizing for trial starts makes sense: the majority of trial starts happen within the first day after install, fitting within attribution windows like SKAN’s 48 hours or AEM’s 1-day or 7-day windows.

However, there are pitfalls. The conversion rate from trial start to paid subscription can vary widely across audiences and app categories. For example, younger users are often more comfortable signing up for trials and cancelling them before they are charged. And in the “Photo & Video” category, only around 18% of users buy a subscription after the trial (compared to 39% in “Health & Fitness”), according to Adapty’s data.

Because Meta’s algorithm optimizes only for the signal you give it, it will steer delivery toward people who start trials, not necessarily those who pay. This can lead to wasted spend and poor subscriber quality.

Upgrading your signal from trial to ‘qualified trial’

You’ve probably heard these recommendations before:

- “Optimize for events that correlate to business goals.”

- “Find your app’s ‘aha moment.’”

- “Use qualified trial events as proxies for conversion.”

Moving to a deeper ‘trial + behaviour’ event can improve signal quality and conversion rate. However, it comes with its own challenges:

- Volume: will the qualified trial event fire often enough for the platform to optimize?

- Coverage: does it miss users who actually convert?

- Choice: which event combination should you pick?

This is where many teams get stuck, balancing the need for early signals with ensuring they are meaningful enough to predict revenue.

A framework for evaluating which signal is better

We covered the value of choosing a ‘qualified trial’ event as well as the challenge of defining it. This challenge looks similar to a classification problem in machine learning. Think of it like teaching an algorithm to sort apples from oranges; each signal acts as a prediction model for future subscribers.

If we treat each event or signal as a predictive “model,” we can evaluate these using standard ML metrics:

- Prediction Volume

- Precision

- Recall

We’ll run through each metric.

Example

Let’s start with an example we’ll use throughout each of these evaluation metrics. Assume we care about finding the best signal that correlates with (or predicts) who’s likely to become a paying subscriber.

- Imagine 300 users install your app.

- Of those, 100 start a trial within the first 24 hours.

- But only 25 go on to convert to paid subscriptions.

You might be considering the following events or event combinations as your signal for ad platforms; we’ll use this framework to choose the best one:

- Optimization Signal A: Trial Started (100 users)

- Optimization Signal B: Trial + Completed Onboarding (70 users)

- Optimization Signal C: Trial + 3x Content Views (30 users)

This example illustrates how each signal has different volumes and potential predictive power. It’s like testing which early user behaviors give the algorithm the clearest hints about who will subscribe.

We now need to evaluate them scientifically.

In practice, the events in each combination are evaluated in your event-tracking SDK or server-to-server (S2S) integration, which outputs a single event, such as a ‘qualified trial’ event, and forwards it to ad platforms.
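A minimal sketch of that combination step, assuming your pipeline can see each user’s timestamped in-app events. The event names (`trial_started`, `content_viewed`), the 24-hour window, and the function `should_fire_qualified_trial` are hypothetical; map them to your own SDK or S2S setup. This version encodes Signal C (trial + 3 content views).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    name: str  # hypothetical event name, e.g. "trial_started"
    timestamp: datetime


def should_fire_qualified_trial(
    events: list[Event],
    install_time: datetime,
    window: timedelta = timedelta(hours=24),
    min_content_views: int = 3,
) -> bool:
    """Decide whether to emit a hypothetical 'qualified_trial' event (Signal C).

    The user must start a trial AND view content at least `min_content_views`
    times, with all counted events falling inside `window` after install.
    """
    cutoff = install_time + window
    in_window = [e for e in events if install_time <= e.timestamp <= cutoff]
    started_trial = any(e.name == "trial_started" for e in in_window)
    content_views = sum(1 for e in in_window if e.name == "content_viewed")
    return started_trial and content_views >= min_content_views


install = datetime(2024, 1, 1, 9, 0)
user_events = [
    Event("trial_started", install + timedelta(minutes=5)),
    Event("content_viewed", install + timedelta(minutes=10)),
    Event("content_viewed", install + timedelta(hours=2)),
    Event("content_viewed", install + timedelta(hours=30)),  # outside the 24h window
]
print(should_fire_qualified_trial(user_events, install))  # False: only 2 views in window
```

When the function returns True, the pipeline would send one combined event to each ad platform rather than the raw underlying events, so the platform optimizes for the combination as a whole.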

Prediction Volume

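For each of these metrics, we’ll use a 2×2 grid (a confusion matrix) of predictions vs. actuals, split into converters and non-converters: users the signal predicted would become paying subscribers (or not), and users who actually did (or did not). In these grids, the population is the 100 users who started a trial.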

Prediction volume is the total number of times the signal fires (or signal event occurs).

Example for Signal A (trial only)

| | Predicted converters | Predicted non-converters |
|---|---|---|
| Actual converters | 25 (TP) | 0 (FN) |
| Actual non-converters | 75 (FP) | 0 (TN) |
| Total signals | 100 | |

Formula: True Positives + False Positives (the total number of times the signal fired)

Ad platforms require a minimum number of signals per week to optimize effectively. For Meta Ads, for example, it’s recommended to have at least 50 conversions per ad set per week.
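To make the grid concrete, here is a minimal sketch that builds the confusion-matrix counts from two sets of user IDs: users for whom the signal fired and users who later paid. It assumes, as in the example, that the population being scored is the 100 trial starters. The class and function names are hypothetical, and the 50-per-week constant mirrors the Meta guidance quoted above.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # signal fired, user converted
    fp: int  # signal fired, user did not convert
    fn: int  # signal did not fire, user converted
    tn: int  # signal did not fire, user did not convert

    @property
    def volume(self) -> int:
        # Prediction volume: every time the signal fired (TP + FP)
        return self.tp + self.fp


def confusion_from_sets(population: set[int], fired: set[int], converted: set[int]) -> Confusion:
    """Count each user in `population` into exactly one of the four cells."""
    return Confusion(
        tp=len(fired & converted),
        fp=len(fired - converted),
        fn=len(converted - fired),
        tn=len(population - fired - converted),
    )


META_MIN_WEEKLY_CONVERSIONS = 50  # suggested minimum per ad set per week

trial_users = set(range(100))  # the 100 users who started a trial
converters = set(range(25))    # 25 of them later paid
signal_a = confusion_from_sets(trial_users, fired=trial_users, converted=converters)
print(signal_a)         # Confusion(tp=25, fp=75, fn=0, tn=0)
print(signal_a.volume)  # 100
# Only meaningful if these counts represent one ad set's weekly volume
print(signal_a.volume >= META_MIN_WEEKLY_CONVERSIONS)  # True
```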

Precision

Precision measures the share of users who triggered the event and then converted. It shows how closely the signal aligns with actual business outcomes, and can also be read as each signal’s actual conversion rate to the goal outcome (e.g., trial-to-paid rate).

Example for Signal A (trial only)

In this example, the signal predicts 100 conversions (signals), of which 25 actually convert to paid.

| | Predicted converters | Predicted non-converters |
|---|---|---|
| Actual converters | 25 (TP) | 0 (FN) |
| Actual non-converters | 75 (FP) | 0 (TN) |
| Precision | 25% | |

Formula: True Positives / (True Positives + False Positives)
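As a quick check of the arithmetic, here is the precision formula applied to the Signal A grid above (a minimal sketch; `precision` is our own helper name, not a library function).

```python
def precision(tp: int, fp: int) -> float:
    """Share of fired signals that belonged to users who later paid."""
    fired = tp + fp
    # Return 0.0 for a signal that never fired, to avoid dividing by zero
    return tp / fired if fired else 0.0


# Signal A (trial only): 25 true positives, 75 false positives
print(f"{precision(tp=25, fp=75):.0%}")  # 25%
```

Returning 0.0 when the signal never fires is a design choice; you could instead treat precision as undefined in that case.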

Recall

This is one that often gets overlooked. Of everyone who converted, how many were captured by this signal? This ensures you’re not overlooking actual converters. For example, you may have a signal with high precision (or conversion rate), but it can miss those who convert without going through your specific event combination.

Formula: True Positives / (True Positives + False Negatives)

Example for Signal A (trial only)

In this example, the signal fires 100 times. Although only 25 of those users convert, no converters are missed.

| | Predicted converters | Predicted non-converters | Recall |
|---|---|---|---|
| Actual converters | 25 (TP) | 0 (FN) | 100% |
| Actual non-converters | 75 (FP) | 0 (TN) | |

Now, let’s compare it to a different scenario.

Example for Signal C (Trial + 3x Content Views)

In this example, Signal C fires only 30 times, and 10 of those users convert. It therefore has higher precision (10 / 30 ≈ 33%), but it misses 15 other users who converted, giving lower recall (10 / 25 = 40%).

| | Predicted converters | Predicted non-converters | Recall |
|---|---|---|---|
| Actual converters | 10 (TP) | 15 (FN) | 40% |
| Actual non-converters | 20 (FP) | 55 (TN) | |
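The recall formula can be checked the same way against the Signal A and Signal C grids (a minimal sketch; `recall` is our own helper name).

```python
def recall(tp: int, fn: int) -> float:
    """Share of all paying users that the signal captured."""
    actual_converters = tp + fn
    # Return 0.0 when nobody converted, to avoid dividing by zero
    return tp / actual_converters if actual_converters else 0.0


print(f"Signal A recall: {recall(tp=25, fn=0):.0%}")   # 100%
print(f"Signal C recall: {recall(tp=10, fn=15):.0%}")  # 40%
```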

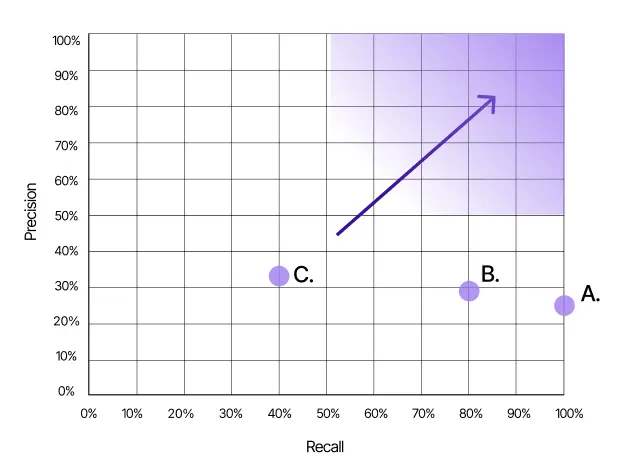

Comparing different signals

Bringing it all together from our examples, we can now compare these different signals (event combos) for our goal event of paying subscribers:

| Signal | Volume | Precision | Recall |
|---|---|---|---|
| A. Trial Started | 100 | 25% | 100% |
| B. Trial + Completed Onboarding | 70 | 29% | 80% |
| C. Trial + 3x Content Views | 30 | 33% | 40% |

Key Insights:

- Signal A captures all converters (100% recall) but has poor precision.

- Signal C has the highest precision but misses most converters (60%).

- Signal B strikes the best balance between precision and recall.

Finding the sweet spot

The optimal signal balances high precision and recall while maintaining sufficient volume.

- High Precision, Low Recall: Your ads may overlook large portions of your audience, limiting scale.

- High Recall, Low Precision: The algorithm wastes money on low-quality users.

- Balanced Signal: Enables ad platforms to optimize effectively, scaling spend while staying efficient.

Visualizing these on a Precision vs Recall graph can make trade-offs clearer.

F1 Score

To help balance precision and recall in one number, you can use the F1 Score.

The F1 Score works like a combined scorecard, balancing precision (how often the signal is right) and recall (how many converters it covers) so you’re not over-optimizing for one at the cost of the other.

Formula: 2 × Precision × Recall / (Precision + Recall)

| Signal (Event / Model) | Signal Volume | Precision | Recall | F1 Score |
|---|---|---|---|---|
| A. Trial Started | 100 | 25% | 100% | 0.40 |
| ✅ B. Trial + Completed Onboarding | 70 | 29% | 80% | 0.42 |
| C. Trial + 3x Content Views | 30 | 33% | 40% | 0.36 |
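A minimal sketch that computes F1 directly from the confusion counts and picks the highest-scoring signal. Signal B’s counts (20 TP, 50 FP, 5 FN) are derived from its 70 signals and 80% recall of the 25 converters. Working from raw counts avoids rounding error from the percentage columns; the helper name `f1_score` is ours.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall, computed from raw counts.

    Algebraically equal to 2 * P * R / (P + R), rewritten as 2TP / (2TP + FP + FN).
    """
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# (TP, FP, FN) for each candidate signal, from the example above
signals = {
    "A. Trial Started": (25, 75, 0),
    "B. Trial + Completed Onboarding": (20, 50, 5),
    "C. Trial + 3x Content Views": (10, 20, 15),
}
scores = {name: f1_score(*counts) for name, counts in signals.items()}
for name, score in scores.items():
    print(f"{name}: F1 = {score:.2f}")
best = max(scores, key=scores.get)
print("Best balance:", best)
# A. Trial Started: F1 = 0.40
# B. Trial + Completed Onboarding: F1 = 0.42
# C. Trial + 3x Content Views: F1 = 0.36
# Best balance: B. Trial + Completed Onboarding
```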

The signal with the highest F1 score offers the best balance between precision and recall, and is a good starting point to weigh against signal volume. In this example, the app could set up Signal B as a new event called ‘Qualified Trial’ and forward it to ad platforms.

Just remember that this balance is dynamic. As your product evolves, re-evaluate signals regularly to keep up with changes in user behavior and platform algorithms.

More advanced variants of this metric, such as F0.5 and F2, weight precision or recall more heavily, respectively, if one matters more to you; F1 sits in the middle.
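The general form is the F-beta score: F_β = (1 + β²) × Precision × Recall / (β² × Precision + Recall), where β below 1 favours precision and β above 1 favours recall (β = 1 gives F1). A minimal sketch, reusing the example’s counts, shows how the preferred signal shifts with β; the helper name `f_beta` is ours.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float) -> float:
    """F-beta from raw counts: beta < 1 favours precision, beta > 1 favours recall."""
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


# (TP, FP, FN) for each candidate signal, from the example above
signals = {
    "A. Trial Started": (25, 75, 0),
    "B. Trial + Completed Onboarding": (20, 50, 5),
    "C. Trial + 3x Content Views": (10, 20, 15),
}
for beta in (0.5, 1.0, 2.0):
    scores = {name: f_beta(*counts, beta=beta) for name, counts in signals.items()}
    best = max(scores, key=scores.get)
    print(f"F{beta:g}: best signal is {best} ({scores[best]:.3f})")
# F0.5: best signal is C. Trial + 3x Content Views (0.345)
# F1: best signal is B. Trial + Completed Onboarding (0.421)
# F2: best signal is A. Trial Started (0.625)
```

With these numbers, a precision-weighted score favours the narrow Signal C, while a recall-weighted score favours broad trial starts.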

Wrapping up

Choosing the right ad signal is one of the last real levers left for marketers. By combining a qualified trial approach with a data-science-inspired evaluation framework, you can stop guessing and start optimizing scientifically.

Start simple: shortlist early-stage signals and compare them using precision, recall, and F1 Score. Over time, this discipline can drive more stable delivery and stronger ROI.

The next evolution of this is machine-learning-powered predictive signals. In this approach, the signal becomes a “predicted subscriber” event. This event is dynamically calculated per user by taking a multitude of variables into account to predict their probability of converting. The signal then fires only when this probability crosses a certain threshold.
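In its simplest form, that last step is a threshold on a model’s output. A minimal sketch, assuming you already have a model that outputs a per-user conversion probability (the model itself is out of scope here). The event name, the candidate thresholds, and the scored history below are made up for illustration; reusing the F1 yardstick from earlier to pick the threshold is one option among several.

```python
def fire_predicted_subscriber(probability: float, threshold: float) -> bool:
    """Fire a hypothetical 'predicted_subscriber' event once p(convert) crosses the threshold."""
    return probability >= threshold


def f1_at_threshold(scored_users: list[tuple[float, bool]], threshold: float) -> float:
    """Evaluate a threshold on historical users given as (predicted probability, actually paid)."""
    tp = fp = fn = 0
    for prob, paid in scored_users:
        fired = fire_predicted_subscriber(prob, threshold)
        if fired and paid:
            tp += 1
        elif fired:
            fp += 1
        elif paid:
            fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Illustrative, made-up scores: (model's predicted probability, did the user pay?)
history = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.4, False),
           (0.3, True), (0.2, False), (0.1, False)]
candidates = [0.2, 0.35, 0.5, 0.65, 0.8]
best = max(candidates, key=lambda t: f1_at_threshold(history, t))
print(f"Best threshold: {best} (F1 = {f1_at_threshold(history, best):.2f})")
# Best threshold: 0.5 (F1 = 0.75)
```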

This could unlock greater precision, as ad platforms can optimize not just for fixed events but for the users most likely to bring long-term value.

Shumel Lais is Co-Founder of Day30, which helps subscription apps with paid acquisition by bridging performance marketing and data science. They are building tech to help subscription apps go from static signals to more dynamic predictive signals.